ECE 4160 Fast Robots

Raphael Fortuna

Lab 11: Localization on the real robot

Summary

In this lab, I combined my work from labs 9 and 10 so that the robot rotates in place, collects ToF data, and then passes that data to the simulation and Bayes Filter to determine where the robot is most likely to be.

Prelab

We were given three new files: localization_extras, which contains a Bayes Filter implementation for us to use; lab11_sim.ipynb, which shows how the Bayes Filter is run using the virtual robot; and lab11_real.ipynb, which provides a framework for communicating with the real robot and the simulation. I placed the .ipynb files into the notebooks folder and placed the localization_extras file with the other robot management files.

Running Simulation Task

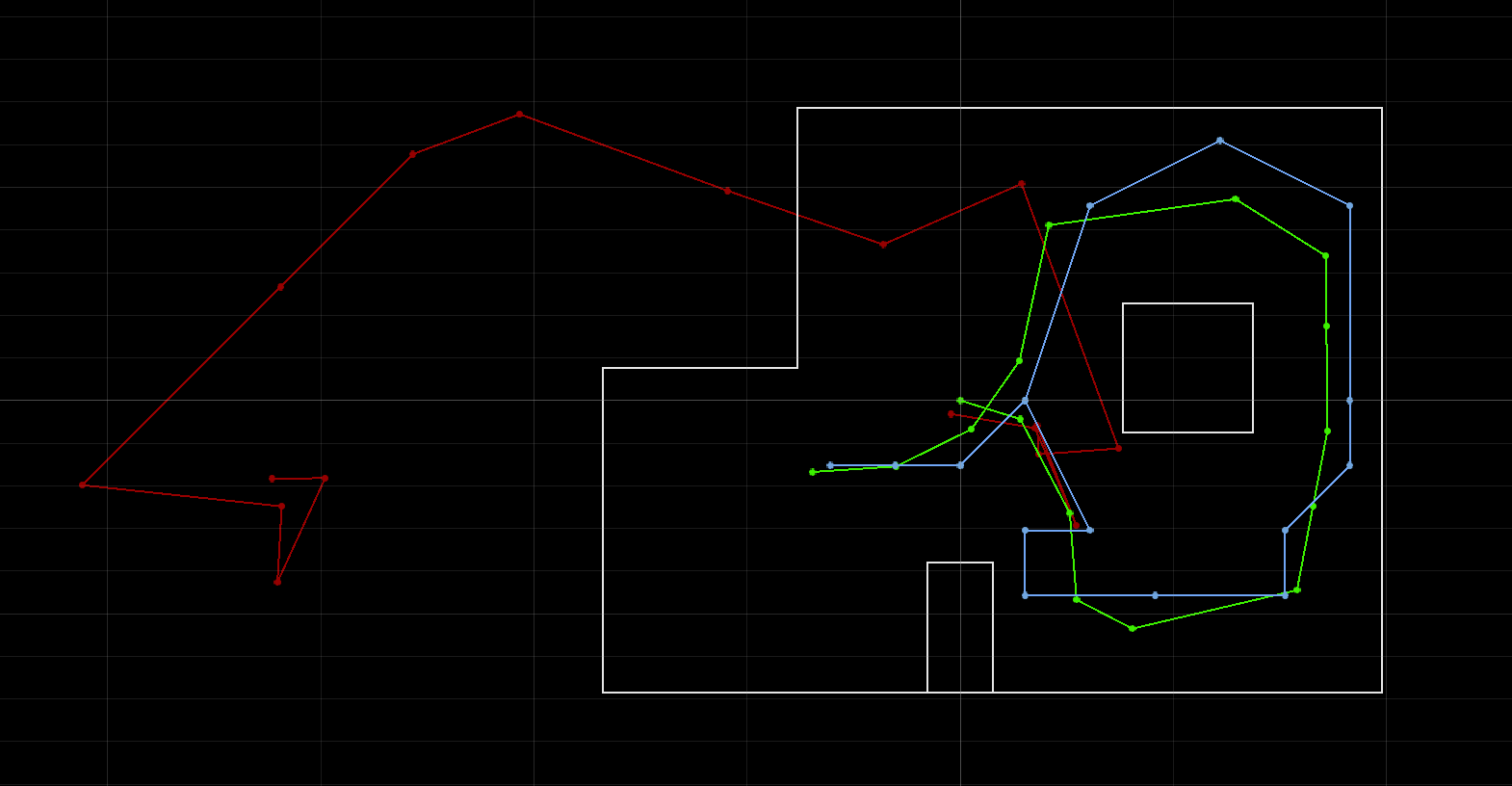

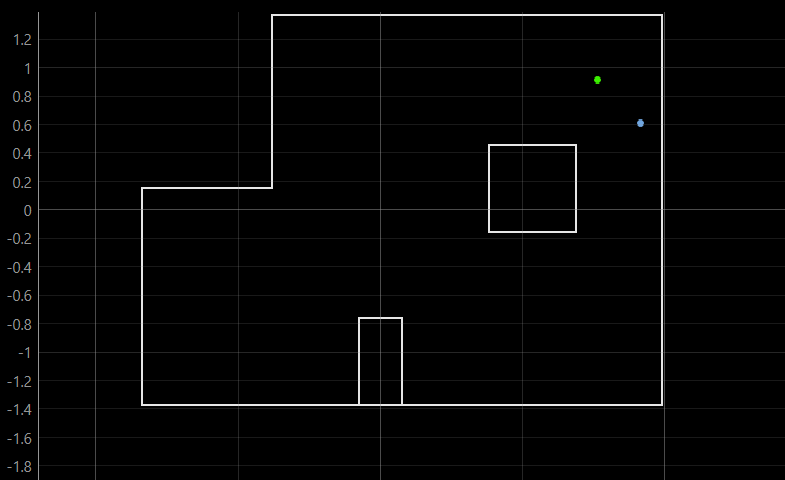

The first task was to run the simulation in lab11_sim.ipynb to confirm that the Bayes Filter works with the virtual robot. The plots from this run show the ground truth of the robot in green, the belief from the Bayes Filter in blue, and the odometry readings from the robot in red.

Update Step Coding Task

The next step was to add code to lab11_real.ipynb. It had an observation loop function to be completed for the RealRobot class, which replaces the VirtualRobot class. The function needed to communicate with the robot over BLE and collect 18 ToF measurements during one rotation, one every 20 degrees. In the previous labs I had been using Python scripts instead of Jupyter notebooks, since I could not connect to the Arduino via bleak and BLE from the notebooks. I tried moving the simulation from the Jupyter notebook into scripts, but this was unsuccessful. So, I created a CSV file to act as an intermediary between the two.

The Python script connects to the robot, gets the ToF data, and writes it to a CSV file. The Jupyter notebook monitors this file's modification time and waits until it changes. Once the file has been edited, the notebook loads the data from the CSV and processes it using the Bayes Filter. For the next lab, I will add a second file going the other way: the Jupyter notebook will write to it, and the Python script will monitor it and send the information back to the robot. The code for this is below:
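As a rough illustration of the notebook side of this handoff, the sketch below polls a file's modification time and then loads one reading per row. The function names, the one-value-per-row CSV layout, the polling interval, and the timeout are all assumptions made for the example, not the exact code used in the lab.

```python
import csv
import os
import time


def wait_for_update(path, last_mtime, poll_s=0.1, timeout_s=None):
    """Block until the file's modification time differs from last_mtime.

    Returns the new modification time. Pass last_mtime=None to return as
    soon as the file exists. (Hypothetical helper, for illustration only.)
    """
    start = time.monotonic()
    while True:
        try:
            mtime = os.path.getmtime(path)
        except FileNotFoundError:
            mtime = None  # the writer has not created the file yet
        if mtime is not None and mtime != last_mtime:
            return mtime
        if timeout_s is not None and time.monotonic() - start > timeout_s:
            raise TimeoutError(f"{path} did not change within {timeout_s} s")
        time.sleep(poll_s)


def load_tof_csv(path):
    """Read one ToF distance per row (assumed layout) and return a list of floats."""
    with open(path, newline="") as f:
        return [float(row[0]) for row in csv.reader(f) if row]
```

In the notebook, the observation loop would call `wait_for_update` with the last modification time it saw and then pass the result of `load_tof_csv` on to the Bayes Filter. Polling the modification time keeps the two processes decoupled, at the cost of a short delay of up to one polling interval.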

This worked really well, and I was able to get data from the robot. It only required two changes: modifying the lab 9 Python script to write to an extra file, and modifying the lab 9 Arduino code to collect 18 ToF measurements instead of 14.

Running the Update Step Task

I placed the robot at each of the four marked poses and ran the Python script and the Jupyter notebook at the same time. One thing to note: I modified localization.py to normalize the angle of the robot's pose that the Bayes Filter outputs, since it was going outside the +/- 180 degree range. The code for that change is below:
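A minimal sketch of this kind of angle normalization is shown here. The function name is hypothetical and this is not the exact change made in localization.py; it only illustrates wrapping a heading in degrees into the half-open range [-180, 180).

```python
def normalize_angle_deg(angle):
    """Wrap an angle in degrees into the half-open range [-180, 180)."""
    # Shift by 180 so the modulo result lands in [0, 360), then shift back.
    return (angle + 180.0) % 360.0 - 180.0
```

For example, `normalize_angle_deg(190.0)` gives `-170.0` and `normalize_angle_deg(-190.0)` gives `170.0`, while angles already in range, such as `110.0`, are unchanged.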

I also had the robot start rotated 90 degrees from the nominal heading at each point, since I am still repairing the front ToF sensor and am using the side ToF sensor instead. This is why the ground truth poses below list a heading of 90 degrees. I added an offset of 3 cm to the ToF data to account for the side sensor's different location on the robot.
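The offset step can be sketched as follows. This assumes the raw readings arrive in millimeters and that the Bayes Filter expects meters; both of those units, along with the names used here, are assumptions for illustration. Only the 3 cm offset comes from the setup described above.

```python
SENSOR_OFFSET_M = 0.03  # 3 cm offset for the side ToF sensor's mounting position


def apply_sensor_offset(readings_mm, offset_m=SENSOR_OFFSET_M):
    """Convert raw ToF readings from millimeters (assumed) to meters and add the offset."""
    return [r / 1000.0 + offset_m for r in readings_mm]
```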

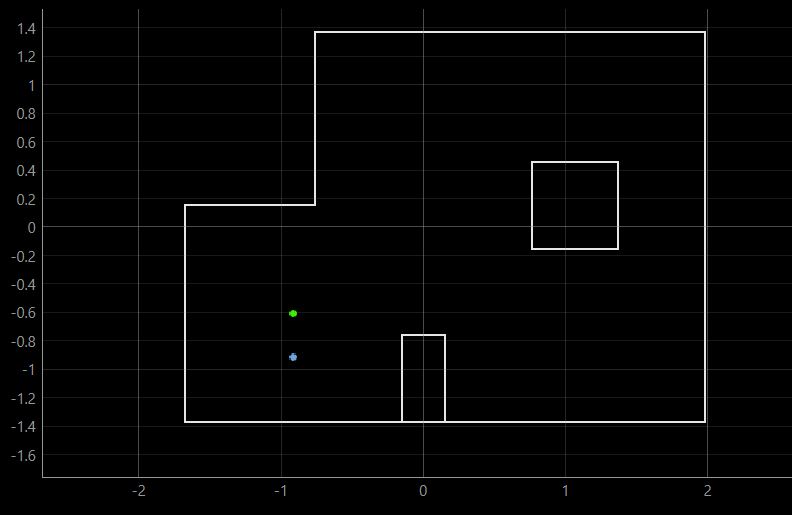

Update step for (-3 ft, -2 ft, 0 deg) Task

- Belief pose: (-0.914, -0.914, 110.000)
- Ground truth pose: (-0.914, -0.610, 90.000)
- Error: (-0.000, 0.305, -20.000)
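The numbers in these lists are in meters and degrees, and the reported error is consistent with ground truth minus belief. The sketch below reproduces that arithmetic for this first point; the helper names are hypothetical, and the belief is entered with the rounded values listed above, so the result matches the reported error only to about three decimal places.

```python
FT_TO_M = 0.3048  # exact feet-to-meters conversion factor


def feet_to_meters(x_ft, y_ft):
    """Convert a marked position from feet to meters."""
    return (x_ft * FT_TO_M, y_ft * FT_TO_M)


def pose_error(ground_truth, belief):
    """Component-wise error (x, y, angle), computed as ground truth minus belief."""
    return tuple(g - b for g, b in zip(ground_truth, belief))


# Marked point (-3 ft, -2 ft) with the robot's heading at 90 degrees.
ground_truth = (*feet_to_meters(-3, -2), 90.0)
belief = (-0.914, -0.914, 110.0)  # rounded belief pose from the list above
error = pose_error(ground_truth, belief)
print(tuple(round(e, 3) for e in error))
```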

The robot localized close to both the point it was at and the direction it was facing: the belief matched in x and was off by 0.305 m in y and 20 degrees in heading. The robot drifted somewhat in the -y direction while rotating, which might have shifted the belief pose downwards.

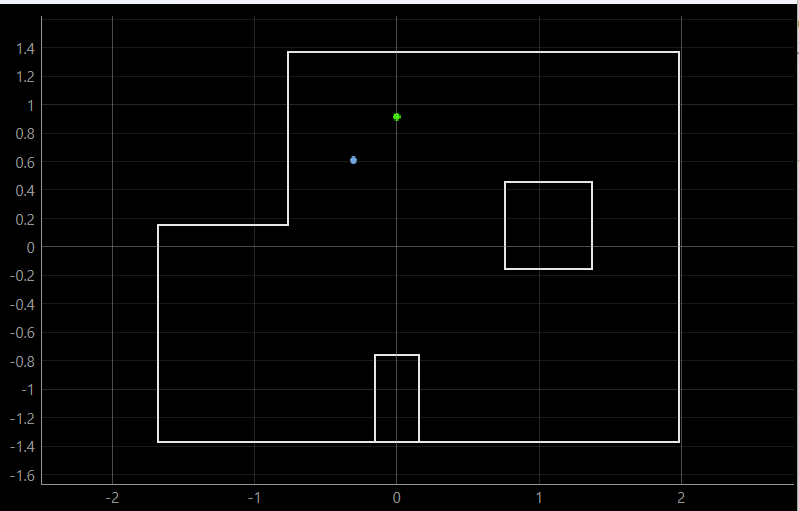

Update step for (0 ft, 3 ft, 0 deg) Task

- Belief pose: (-0.305, 0.610, 90.000)
- Ground truth pose: (0.000, 0.914, 90.000)
- Error: (0.305, 0.305, 0.000)

The belief position was shifted slightly downwards and to the left. The robot again translated somewhat while rotating, which could have caused this shift even though it returned to the correct pose. The direction of the belief matched the ground truth.

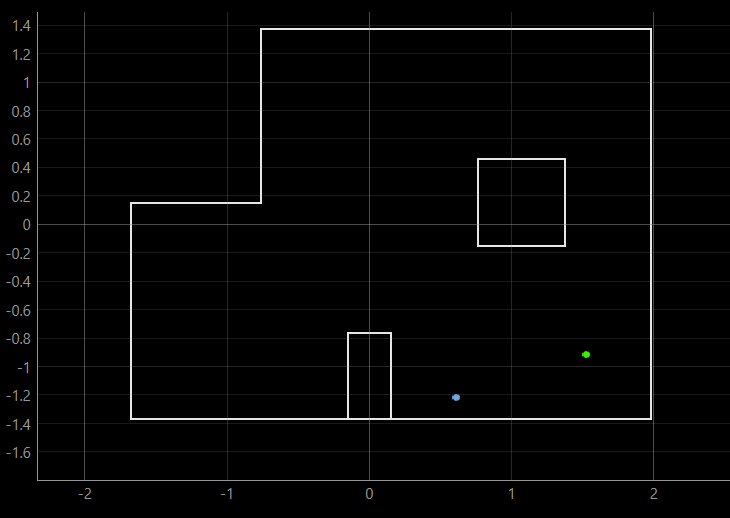

Update step for (5 ft, -3 ft, 0 deg) Task

- Belief pose: (0.610, -1.219, 50.000)
- Ground truth pose: (1.524, -0.914, 90.000)
- Error: (0.914, 0.305, 40.000)

This point had the largest error of all four points, with both a positional and an angular error. The robot translated while rotating here as well, which may have left it closer to the wall at the end of the scan, so that it appeared to be nearer the other box. It may also have collected data from the gap between the top box and the wall, making the large box seem farther away. These two factors combined to shift the belief towards the smaller box and to introduce an angle error.

Update step for (5 ft, 3 ft, 0 deg) Task

- Belief pose: (1.829, 0.610, 90.000)
- Ground truth pose: (1.524, 0.914, 90.000)
- Error: (-0.305, 0.305, 0.000)

This point had nearly the same error as the second point, but mirrored across the y axis: the x error has the opposite sign. The robot again translated somewhat while taking measurements, which might have shifted the belief position. The angle had no error, again as with the second point.